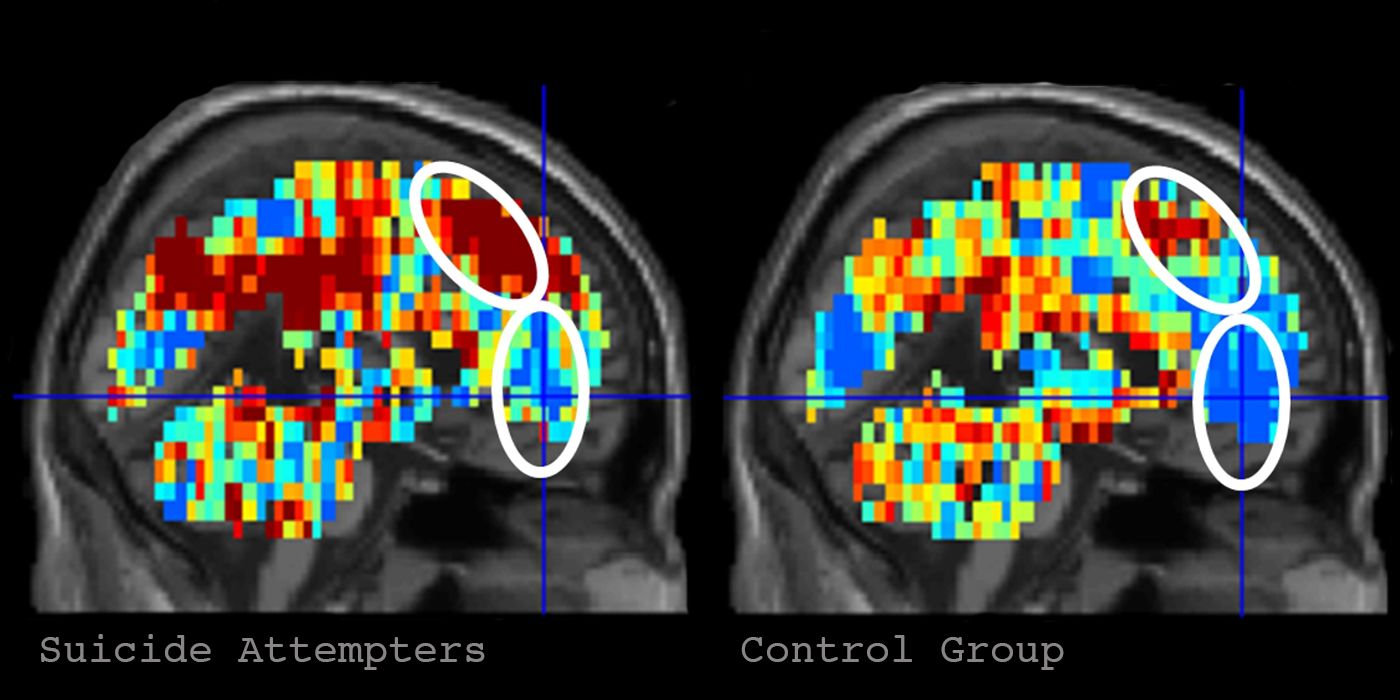

Earlier this week, the journal Nature Human Behaviour published a controversial study in which researchers claimed to be able to detect suicidal thoughts. The study used fMRI imaging to look at neural signatures for different concepts related to life and suicide, using those 'signatures' as a proxy for whether a person had suicidal ideation. The researchers trained a machine learning algorithm on that fMRI data and, they claimed, taught it to recognize whether a person had suicidal thoughts with 91 percent accuracy.

The study was published in a prominent journal, and it relied on two methodologies that often draw interest and coverage from media organizations but skepticism from researchers: neuroimaging and machine learning. In popular reporting, excitement about these two methodologies can often outshine any skepticism toward them.

In a blog post on Thursday, the anonymous writer Neurocritic broke down some of those points of skepticism. Chief among them: the researchers excluded a number of participants from their experiment due to "poor data quality." (It's worth noting that the researchers themselves stress, both in the paper and in interviews, the need to replicate their findings with larger sample sizes in the future.)

Another point that researchers online, Neurocritic among them, are questioning is the study's machine learning component. The researchers taught their algorithm to recognize suicidal thoughts through a procedure known as "leave-one-out cross validation." In each trial, the algorithm was fed data from all of the participants except one: it was 'shown' each of those participants' fMRI data and 'told' whether or not that participant had suicidal thoughts. Then the algorithm was fed the fMRI data from the participant who had been left out, but it was not 'told' whether that person had suicidal thoughts.

The algorithm's ability to classify those left-out individuals was the measure of its accuracy—that's where the 91 percent figure comes from.
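To make the procedure concrete, here is a minimal Python sketch of leave-one-out cross validation. It is not the study's code: the simple nearest-centroid classifier, the function names (`fit_centroids`, `predict_centroid`, `leave_one_out_accuracy`) and the two-number "feature vectors" standing in for each participant's fMRI data are all hypothetical, chosen only for illustration.

```python
from typing import Callable, Dict, List, Sequence

Vector = List[float]


def fit_centroids(xs: Sequence[Vector], ys: Sequence[int]) -> Dict[int, Vector]:
    """Hypothetical stand-in classifier: average the feature vectors for each label."""
    sums: Dict[int, Vector] = {}
    counts: Dict[int, int] = {}
    for x, y in zip(xs, ys):
        if y not in sums:
            sums[y] = [0.0] * len(x)
            counts[y] = 0
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict_centroid(centroids: Dict[int, Vector], x: Vector) -> int:
    """Assign the label whose centroid is closest (squared Euclidean distance)."""
    def dist(c: Vector) -> float:
        return sum((a - b) ** 2 for a, b in zip(c, x))
    return min(centroids, key=lambda y: dist(centroids[y]))


def leave_one_out_accuracy(
    xs: Sequence[Vector],
    ys: Sequence[int],
    fit: Callable[[Sequence[Vector], Sequence[int]], Dict[int, Vector]],
    predict: Callable[[Dict[int, Vector], Vector], int],
) -> float:
    """Fraction of left-out participants the model labels correctly."""
    n = len(xs)
    correct = 0
    for i in range(n):
        # Train on everyone except participant i, with their labels visible ("told").
        train_x = [x for j, x in enumerate(xs) if j != i]
        train_y = [y for j, y in enumerate(ys) if j != i]
        model = fit(train_x, train_y)
        # Classify participant i without showing the model that participant's label.
        if predict(model, xs[i]) == ys[i]:
            correct += 1
    return correct / n


features = [[0.0, 0.1], [0.2, 0.0], [0.1, 0.2], [1.0, 0.9], [0.9, 1.1], [1.1, 1.0]]
labels = [0, 0, 0, 1, 1, 1]
print(leave_one_out_accuracy(features, labels, fit_centroids, predict_centroid))  # 1.0
```

Each participant is held out exactly once, so the reported accuracy is simply the share of those held-out predictions that were right. Note that this measures how well the model separates the particular people in the sample; it says nothing on its own about what the features actually represent.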

One neuroscientist who specializes in machine learning tweeted out an interesting parallel. Let's say a researcher shows a group of squeamish people a violent video and monitors their pulse. That researcher then trains a machine learning algorithm to classify squeamish people based on their pulse rate: does that make pulse rate a biological marker for squeamishness?

And then there's the particularly prickly point at which accuracy meets ethics. Assuming this technology could one day work perfectly, there is the ethical question of whether it is right to put someone through a procedure that would reveal their thoughts. It's hard to imagine a convincing argument that preventing suicide, even against an attempter's will, would be unethical. It's worth noting that Marcel Just, the author of the study, repeatedly emphasized that this paper is miles away from creating a predictor of suicidality, although that is a subtext media outlets have latched on to.

But the possibility of a mechanism like this, one that is not correct 100 percent of the time, being applied to a person regardless of their will is particularly chilling.


Uncommon Knowledge

Newsweek is committed to challenging conventional wisdom and finding connections in the search for common ground.

About the writer

Joseph Frankel is a science and health writer at Newsweek. He has previously worked for The Atlantic and WNYC.